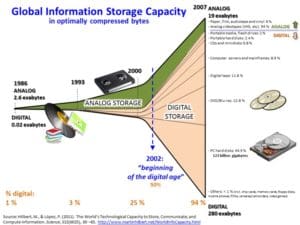

Today’s massively increasing collections of data improve the potential value of data analytics applications like machine learning. How can you extract useful answers and conclusions from the data you have? In industry, the “data warehouse” concept has been used to merge operational data with business data. The basic idea is to collect and organize the data before it is stored, with business intelligence-related uses in mind. If, for example, the plant manager wants to see a daily report on the factory production, the data collections and database design are structured for this purpose.
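As a minimal sketch of this "organize before you store" idea, the Python snippet below defines a table up front for the plant manager's daily production report and then runs that report as a single query. The table name `production`, its columns, and the sample rows are all hypothetical values invented for illustration; a real warehouse would have its own schema.

```python
import sqlite3

# Made-up sample rows for illustration only: (day, production line, units made).
SAMPLE_ROWS = [
    ("2024-01-01", "line_a", 120),
    ("2024-01-01", "line_b", 95),
    ("2024-01-02", "line_a", 130),
]


def build_warehouse(rows):
    """Create an in-memory database whose schema is fixed before any data arrives."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE production ("
        " day TEXT NOT NULL,"
        " line TEXT NOT NULL,"
        " units INTEGER NOT NULL CHECK (units >= 0))"
    )
    conn.executemany("INSERT INTO production VALUES (?, ?, ?)", rows)
    return conn


def daily_report(conn):
    """Return total units per day, the report the schema was designed for."""
    return conn.execute(
        "SELECT day, SUM(units) FROM production GROUP BY day ORDER BY day"
    ).fetchall()


if __name__ == "__main__":
    for day, units in daily_report(build_warehouse(SAMPLE_ROWS)):
        print(day, units)
```

Because the structure was decided before loading, the report is a one-line query. The trade-off is that data which does not fit the schema has no place to go.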

This contrasts with the “data lake” concept, in which all imaginable data is simply collected first and big-data tools like Hadoop are used to organize it afterward. It’s not hard to imagine that the data lake concept could collect a veritable junkyard of data in the supply chain. For example, imagine digitizing old plant and warehouse photos; scanning old and new plant design documents; collecting old and new versions of operating procedures and the control and safety system configurations; and collecting production records, raw material purchases, records on shipment transactions, product quality measurements from the lab, and the real-time streaming data from the plant control system. Now, connect to local weather databases; sprinkle in some OSHA, EPA, and local zoning regulations; and don’t forget those old VHS training videos.

Basically, the above data collection is disordered and largely unstructured, has high entropy, and would take work (programming) to make it ordered. Surely there are ways to organize and extract useful information from this junkyard of data. Buried deep in this data, you could potentially find the justification to replace a specific piece of factory equipment that limits production rate or that is responsible for off-spec product.

Just because we have a massive junkyard of data in a data lake does not mean we have the right data to answer questions like: “How do I improve production rate, reduce maintenance costs, or improve product quality in my factory?” Machine learning with neural networks is a promising technology for extracting useful models from data, but it typically needs very specific data that is not likely to be found in a data lake.

An IEEE article points out that, in many cases, you need more data than your big data “junkyard” can provide. In the article’s example, the author (Jacques Mattheij) decided to build a factory that turned tons of mixed Lego blocks he could buy at low cost into sorted Lego blocks he could sell for nearly four times as much. Perhaps he was motivated by the technical challenge more than the business opportunity. The basic idea is to use a USB camera connected to a PC that monitors a conveyor belt. The PC needs to identify each Lego block and use puffs of air to blow parts off the belt into bins that each hold a specific part type.

The hard problem was to train a machine learning algorithm to correctly identify each unique Lego part. There are more than 1,000 types of Lego blocks, so rather than photograph each unique type from multiple angles and label each photograph, Jacques found a faster way to train his neural model. He started by creating training sets for some Lego types. Then, as he used early models to identify mixed Lego types, he corrected the many mislabeled parts and used these newly labeled photos to further train his model. The next test had even fewer mislabeled parts, which were easier to correct. This procedure continued until the rate of incorrect identifications became very small.
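The correct-and-retrain loop described above can be sketched in a few lines of Python. This is a toy simulation under loud assumptions, not Jacques's actual system: photos are replaced by noisy 2-D feature vectors, the neural network is swapped for a simple nearest-centroid classifier, and the human reviewer is simulated by looking up the true part type. The constants `NUM_TYPES`, `SEED_TYPES`, `BATCH_SIZE`, and `ROUNDS` are hypothetical.

```python
import random
from collections import defaultdict

NUM_TYPES = 10    # hypothetical; the article mentions more than 1,000 types
SEED_TYPES = 3    # part types hand-labeled before the first run
BATCH_SIZE = 200  # parts passing the camera in each round
ROUNDS = 4

rng = random.Random(0)  # fixed seed so the simulation is repeatable


def photograph(part_type):
    """Stand-in for a camera image: a noisy 2-D feature vector for one part."""
    return (part_type * 10.0 + rng.gauss(0, 1), rng.gauss(0, 1))


def train(labeled):
    """Nearest-centroid 'model': the mean feature vector of each known label."""
    groups = defaultdict(list)
    for features, label in labeled:
        groups[label].append(features)
    return {
        label: (
            sum(f[0] for f in pts) / len(pts),
            sum(f[1] for f in pts) / len(pts),
        )
        for label, pts in groups.items()
    }


def predict(model, features):
    """Return the known label whose centroid is closest to the features."""
    return min(
        model,
        key=lambda label: (features[0] - model[label][0]) ** 2
        + (features[1] - model[label][1]) ** 2,
    )


def run_rounds():
    """Run the loop and return the mislabel rate observed in each round."""
    # Start with a small hand-labeled set covering only some part types.
    labeled = [(photograph(t), t) for t in range(SEED_TYPES) for _ in range(5)]
    error_rates = []
    for _ in range(ROUNDS):
        model = train(labeled)
        # A new mixed batch; cycling through types guarantees each one appears.
        batch = [(photograph(i % NUM_TYPES), i % NUM_TYPES) for i in range(BATCH_SIZE)]
        wrong = sum(1 for features, true in batch if predict(model, features) != true)
        error_rates.append(wrong / BATCH_SIZE)
        # Simulated human review: correct predictions are accepted, wrong ones
        # are fixed, so every photo joins the training set with its true label.
        labeled.extend(batch)
    return error_rates


if __name__ == "__main__":
    for n, rate in enumerate(run_rounds(), start=1):
        print(f"round {n}: {rate:.1%} mislabeled")
```

In the first round the model has never seen most part types, so most parts are mislabeled. Once the corrected batch is folded into the training set, later rounds have far fewer errors to fix, which is the effect the article describes.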

Organizing the data collection for a specific need (like production reports) is a good fit for the data warehouse concept and established relational database technology. Creating data lakes with massive amounts of data does add considerable flexibility for the types of analysis that can be done. With some programming effort, you could use the data from the lake to build the types of business intelligence applications that have been done with data warehouse concepts, but from a programming point of view you would need a good map of all the data you have, and how to access it with a query. It is possible to use data in warehouses and lakes for machine learning applications, but in many cases the data you will need is just not there.
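One way to picture the "map of all the data" is a small catalog that records what each data set is, where it lives, and how it can be searched. The sketch below is purely illustrative: the `CatalogEntry` fields, the entry names, the `lake/...` paths, and the tags are all hypothetical, and real data lakes typically rely on a dedicated catalog service rather than an in-memory list.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class CatalogEntry:
    name: str       # human-readable data set name
    fmt: str        # storage format, e.g. "csv" or "video"
    location: str   # hypothetical path inside the lake
    tags: frozenset = field(default_factory=frozenset)


# Hypothetical entries loosely based on the junkyard examples in this article.
CATALOG = [
    CatalogEntry("production_records", "csv", "lake/production/",
                 frozenset({"production", "structured"})),
    CatalogEntry("lab_quality", "csv", "lake/lab/",
                 frozenset({"quality", "structured"})),
    CatalogEntry("training_videos", "video", "lake/media/vhs/",
                 frozenset({"training", "unstructured"})),
]


def find(catalog, *required_tags):
    """Return the names of entries that carry every requested tag."""
    wanted = set(required_tags)
    return [entry.name for entry in catalog if wanted <= entry.tags]


if __name__ == "__main__":
    print(find(CATALOG, "structured"))
    print(find(CATALOG, "weather"))
```

An empty result from a search like `find(CATALOG, "weather")` is the catalog's way of saying what the paragraph above warns about: the data you need may simply not be in the lake.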

The conclusion here is that data warehouses, data lakes, and even the real-time and historical supply chain data are all great sources of data to make useful reports, regressions, and discoveries that can help drive business efficiency. However, even the biggest piles of data will not contain all the information needed to build most machine learning applications that can bring automation to the next level. The Lego example shows that creating a useful machine learning application required creating new data to train the neural networks, and that some of this data was created during the development process. Data analytics and machine learning technology work best when there is a clear vision of a problem to be solved and modern tools are deployed in a thoughtful manner.
