How can supply chain planning and execution systems evolve to allow prescriptive suggestions for resolving near real time problems? In other words, how can we build a self-driving supply chain? And how do we make use of machine learning to make sure the system gets better and better over time? It is not a simple question.

Machine Learning and Supply Chain Planning

Demand planning applications have had machine learning capabilities for over a decade. Demand planning is a good application for machine learning for two reasons. First, because the measure of success – the forecast accuracy – is clear. Having a clear measure of success sounds easy.

But often, defining success in not easy. Consider a situation where a manufacturer learns of a shortage of a key component. Customers have already been promised products that depend upon that key production input. The supply chain planning engine needs to be rerun to generate a new supply plan.

But in this case the objective can be hard to define:

- Which customers get the full order on time in full?

- Should the company take a margin hit by expediting a shipment or risk a dissatisfied customer’s future business?

- How far out do we push the promise date for a customer?

- Which customers do we short and by how much?

Some suppliers of supply chain planning are looking to “solve” this problem by using pattern recognition to see how planners resolved similar problems in the past and then suggest a similar resolution the next time the problem arises. The more at bats a system has, the faster it gets smarter. If you are doing daily demand plans, and every day you have new data on how well yesterday’s forecast did, you have a system that can improve quickly.

In the replanning problem described above, significant component shortages may only occur a couple times a year. And the resolution that was used in June may be completely different than the one in December. It may take hundreds, but more likely, thousands of at bats before the machine can begin to unravel why a planner did one thing in one situation and a different thing in the next. This form of artificial intelligence just won’t work.

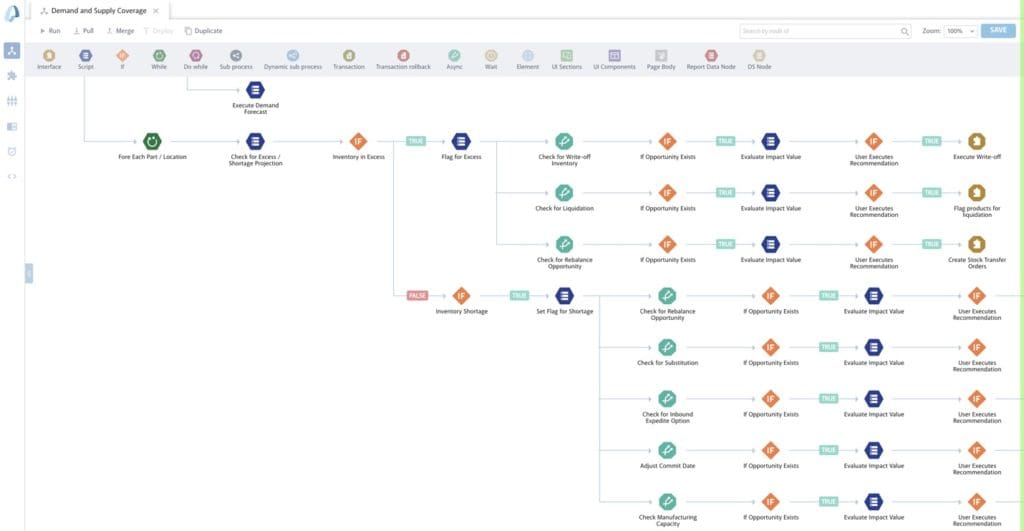

It is possible to build these decision rules, but in a global multinational there can be so many it can become a big project. A work flow engine that allows nonprogrammers to build these rules is a necessity.

The second reason that demand planning is a good application for machine learning is that is has the data it needs to improve. Machine learning is a form of continuous improvement. So, in demand planning the machine learning engine looks at the forecast accuracy from the model, and asks itself if the model was changed in some way, would the forecast be improved. Forecasts are improved in an iterative, ongoing manner.

Architecturally, applying machine learning in supply planning is more difficult. In a demand management application, the system is continuously monitoring forecasting accuracy. That accuracy data in the system allows for the learning feedback loop. Further, demand planners, the people that use the outputs of the system, play a core role in making sure the data inputs stay clean and accurate.

In supply planning, the data comes from a different system or systems. Improving operations can be extraordinarily challenging if the data that holds the answers is scattered among different incompatible systems, formats and processes. And the people responsible for making sure the data put into various systems is accurate don’t use the system outputs; in short, they have less incentive for making sure inputs stay clean. This is a master data management problem.

Middleware Enables a Machine Learning Feedback Loop

A form of middleware/business intelligence must access up-to-date and clean data, analyze it, and then either automatically change the parameters in the supply planning application or alert a human that the changes need to be made. For example, for supply chains to operate efficiently, their lead times need to be kept up to date. Having the right amount of inventory depends upon accurate lead times. Keeping lead times up to date sounds simple. It is not done nearly enough at most companies. And it has huge value. Cognitive solutions are beginning to automate these kinds of updates.

These middleware solutions do exist. I’m most familiar with the solution from OSIsoft, the PI System, which collects, analyzes, visualizes and shares large amounts of high-fidelity, time-series data from multiple sources to either people or systems.

If you can bring together the middleware, supply chain applications with optimization and predictive analytics, and the wide range of decision rules needed for prescriptive analytics, you can create a feedback loop that can take advantage of machine learning. When that happens, the system will get better and better over time. This is just beginning to happen.

I was recently briefed by Fred Laluyaux, the CEO, and Ram Krishnan, Vice President, at Aera Technology. The technology stack I have described is what they are delivering. And it is real. They also sent me a video that contained a presentation that one of their customer’s – Merck GMS – given at a supply chain conference in London.

They describe their technology as having data crawlers. This real-time crawling technology collects, indexes and harmonizes billions of transactions from what can be multiple enterprise and manufacturing systems as well as external data sources. This data is continuously moved, there are thousands of pings per day of the transactional systems, to their multitenant Cloud solution.

Aera then indexes, correlates and normalizes the raw data from all these systems into a single information model. They have over 5000 metrics that are pre-coded that can be used to understand a business. Advanced search makes the entire data set dynamically accessible. At this level, the data can begin to be analyzed. This base layer of the platform is what Merck considers to be their supply chain control tower.

The next layer is what they call their analytics engine. Here is where predictive algorithms and optimization tools that solve both linear and mixed-integer programming problem can be quickly processed using in-memory computing. This sound complex, but it is essentially the kind of supply chain planning applications that have long been available. But the Aera architecture allows the planning engine to solve supply chain problems in short time horizons.

Bridging the Gap Between Supply Chain Planning and Execution

But as I pointed out above, in near real-time, you can’t solve all your problems with optimization. Often a company will need a set of rules that help them make tough trade off decisions. Aera has a work flow engine that helps to create these rules. Users will often be given prescriptive suggestions that they can say “yes” or “no” to. If they say “no”, they select a code that gives the reason why they said no. Then the history is collected that provides the feedback loop that allows the machine to get smarter over time. This is the point at which the system becomes a self-driving supply chain.

I rarely write about technology from a single company. But I was impressed by what I saw and heard. If you like jargon, then we are getting close to having cognitive automation for a self-driving supply chain. If not, this is a cool solution that helps to bridge planning and execution.

Leave a Reply