Many multinationals accelerated their investments in digitizing data during the pandemic. According to Colin Masson, a director of research at ARC Advisory Group, the opportunity to mine these vast quantities of data to achieve business value is “NOW.” Mr. Masson, who leads ARC’s research on industrial AI and data fabrics, recently wrote the report “Industrial-grade AI: Transforming Data into Insights and Outcomes.”

Mr. Masson points out the “challenges are not just about the volume, but also the complexity and fragmentation of data generated by sensors, machines, and smart factories. This data is often disconnected and scattered across various applications, making it difficult to harness for insights and decision-making.”

Frederic Laluyaux, the CEO of Aera Technology, agrees with this assessment. Business cycles are compressing. In the supply chain arena, the need to make course corrections is exploding. Given the volume, complexity, and speed of the decisions that need to be made and executed, the way companies currently manage them is unsustainable. Decisions need to be digitized.

The data needed to support AI digitization can be very granular. Mr. Masson of ARC points out, “Each AI use case requires specific datasets and may necessitate different tools and techniques.” For instance, advanced factory scheduling solutions use predictive maintenance inputs, which rely on sensor data to forecast equipment failures. Short-term forecasting relies on POS and other forms of downstream data. Warehouse management systems rely on RF scans of locations and products. Mobile robots use camera data, while autonomous trucks use truck sensors, LIDAR, and camera data. Real-time risk solutions mine vast quantities of online data using natural language processing. “A ‘big bang’ approach, applying a one-size-fits-all AI solution, is not viable in an environment where industrial-grade solutions are needed to meet health, safety, and sustainability goals,” Mr. Masson points out.

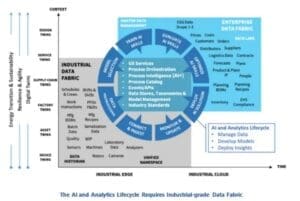

This is why data fabrics are necessary. A data fabric refers to an architecture that supports a unified approach to data management. Data fabrics need to work across an AI and Analytics lifecycle. This is a critical framework that guides the transformation of “good enough” data into insights and actions. Mr. Masson says the analytics lifecycle includes:

Managing Data: Creating a business-ready analytics foundation by integrating and standardizing data across systems.

Developing Models: Building and scaling AI models in a manner that ensures they are reliable and understandable.

Deploying Insights: Operationalizing AI throughout the business to automate processes and empower decision-making by the right people at the right time.

This lifecycle is essential where timely and accurate decisions can significantly impact supply chain efficiency, safety, customer service, and profitability.

Data fabrics can simplify the AI and Analytics lifecycle by weaving together a unified layer for data management and integration across a company’s IT environment. However, existing enterprise data fabrics may not be “industrial grade” enough for many AI use cases. They often require a “big bang” approach to migrating and standardizing data in cloud-based data lakes. They may not handle the complex data types encountered on the edge, which are often unstructured, time-sensitive, and critical for real-time decision-making.

According to Mr. Masson, “a new category of industrial-grade data fabrics will eventually emerge to meet the unique needs of industrial settings, and software alliances are already being forged to bring them to fruition.” These new data fabrics will need to go beyond traditional enterprise data fabrics, which are optimized for cloud environments, to be able to embrace complex supply chain data. These new fabrics will promote the development of new models that can operate effectively on the edge, in the enterprise cloud, or across the extended supply chain.

Currently, suppliers of supply chain technologies support 25 AI-based supply chain use cases. Most of these use cases are based on a more siloed approach to data management.

However, Aera Technology has built solutions—what they call skill sets—on top of a data fabric that allows a solution provider to embrace a broader set of business use cases. Aera refers to its solutions as skill sets because this is a toolset approach to automating decisions.

Mr. Laluyaux says Aera has spent hundreds of millions of dollars on building their platform. In the first quarter, Aera loaded 1.3 trillion rows of data into the platform. “So far this year, we have digitized over 25 million recommendations.” He shared the names of some of their customers on an off-the-record basis. They include some of the largest companies in the world.

Aera has developed something called a “data quality skill.” The system tells the user about the completeness, accuracy, and consistency of data elements needed to support a wide range of automated decisions.
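To make the idea of a completeness check concrete, here is a minimal Python sketch. It is not Aera's implementation, which the article does not describe; the field names (`sku`, `lead_time_days`, `supplier_id`) and the `completeness` function are hypothetical, chosen only to illustrate how a per-field completeness score might be computed.

```python
from typing import Any, Dict, List, Sequence

# Hypothetical field names; the article does not describe Aera's data model.
REQUIRED_FIELDS = ("sku", "lead_time_days", "supplier_id")


def completeness(
    records: List[Dict[str, Any]],
    fields: Sequence[str] = REQUIRED_FIELDS,
) -> Dict[str, float]:
    """Return, per field, the share of records with a non-missing value."""
    if not records:
        return {f: 0.0 for f in fields}
    return {
        f: sum(1 for r in records if r.get(f) is not None) / len(records)
        for f in fields
    }


# Illustrative sample data, not real customer data.
records = [
    {"sku": "A1", "lead_time_days": 14, "supplier_id": "S9"},
    {"sku": "A2", "lead_time_days": None, "supplier_id": "S9"},
    {"sku": "A3", "lead_time_days": 21, "supplier_id": None},
    {"sku": "A4", "lead_time_days": 7, "supplier_id": "S2"},
]
report = completeness(records)
```

A fuller check would also score accuracy and consistency, for example by comparing the same lead time across two source systems, but those rules depend on data the article does not detail.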

There are several steps to automating decisions. “If you want to digitize decision-making, you need 100% of the information required for a decision to be made available in a normalized data model. We built a technology that allows us to crawl the transactional systems. So, we deploy an agent on an SAP environment. The agent selectively pushes data to the Aera data model.” Not all the transactional data, just the data required to calculate a metric or make a decision. These agents help to ensure the core parameters in planning, like lead times, are accurate and up to date.

This data doesn’t persist forever. At some point, it has been used for its intended purpose, and it disappears. Similarly, data needs to be refreshed at different speeds. For capable-to-promise, one of their clients has the agents refresh the needed data every 15 minutes. Aera’s use cases mainly rely on enterprise master data rather than edge data.

Secondly, “if you want to digitize a decision,” Mr. Laluyaux explained, “you need to have a decision logic.” That logic could be as simple as heuristics – if A happens, then do B. Or it could involve machine logic or optimization.
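The simplest form of decision logic, the "if A happens, then do B" heuristic, can be sketched in a few lines of Python. The inventory scenario, the `decide` function, and the action names below are assumptions made for illustration; the article does not specify the rules Aera's customers use.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    action: str   # hypothetical action label
    reason: str   # documented logic behind the recommendation


def decide(projected_inventory: int, safety_stock: int) -> Recommendation:
    """Heuristic rule: if inventory drops below safety stock, expedite."""
    if projected_inventory < safety_stock:
        return Recommendation(
            action="expedite_replenishment",
            reason=(
                f"projected inventory {projected_inventory} "
                f"is below safety stock {safety_stock}"
            ),
        )
    return Recommendation(
        action="no_action",
        reason="projected inventory covers safety stock",
    )


low = decide(projected_inventory=80, safety_stock=100)
ok = decide(projected_inventory=120, safety_stock=100)
```

Returning the reason alongside the action keeps the recommendation documented, which matters once the user's reaction to it is recorded.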

Thirdly, the decision to be executed is then pushed back to the relevant application, whether that be a transportation management system or a planning solution. The system “builds a permanent memory of all the decisions that are made on a given topic,” Aera’s CEO explained. “That allows the system to learn.”

“If I give you a fully documented recommendation, going to the lowest level of detail of logic, and I capture your reaction to that decision,” Mr. Laluyaux said, then you have a foundation to build automated decision-making on top of.
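One way to picture a memory of recommendations and the reactions to them is a simple log that can be queried by topic. The sketch below is an assumption-laden illustration, not Aera's design: the `DecisionRecord` and `DecisionMemory` classes and the acceptance-rate measure are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DecisionRecord:
    topic: str           # e.g. "expedite_order" (hypothetical label)
    recommendation: str  # what the system proposed
    rationale: str       # the documented logic behind it
    accepted: bool       # the user's reaction


@dataclass
class DecisionMemory:
    records: List[DecisionRecord] = field(default_factory=list)

    def log(self, record: DecisionRecord) -> None:
        """Append a recommendation and the user's reaction to the log."""
        self.records.append(record)

    def acceptance_rate(self, topic: str) -> Optional[float]:
        """Share of accepted recommendations for a topic, or None if unseen."""
        relevant = [r for r in self.records if r.topic == topic]
        if not relevant:
            return None
        return sum(r.accepted for r in relevant) / len(relevant)


memory = DecisionMemory()
for accepted in (True, True, True, False):
    memory.log(
        DecisionRecord(
            topic="expedite_order",
            recommendation="expedite PO",
            rationale="inventory below safety stock",
            accepted=accepted,
        )
    )
rate = memory.acceptance_rate("expedite_order")
```

A consistently high acceptance rate on a topic is one plausible signal that a decision is a candidate for automation, though the article does not say which criteria Aera applies.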

Mr. Laluyaux admits that not all decisions can be automated. Situational decisions usually cannot. But a company has tens of thousands of people doing repetitive work, which is the low-hanging fruit. In short, Aera builds “skills” by building a corresponding number of models. Those models can be big or small and simple or complex. The solution then does fast calculations as key data changes to develop a better solution to a problem.

The platform has thresholds that say, for example, “If the dollar value of orders changes a little, that doesn’t matter. Don’t recalculate the forecast. But if the demand changes by 10% for the coming month, then the forecast should be recalculated.” The user sets those thresholds.
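The threshold rule in that example can be expressed as a small check. This is a sketch under stated assumptions: the `should_recalculate` function is hypothetical, and the 10% default simply mirrors the figure in the example above, since users set their own thresholds.

```python
def should_recalculate(
    previous_demand: float,
    current_demand: float,
    threshold: float = 0.10,  # user-set; 10% mirrors the example above
) -> bool:
    """Return True when the relative change in demand meets the threshold."""
    if previous_demand == 0:
        # Any demand appearing from a zero baseline counts as a change.
        return current_demand != 0
    change = abs(current_demand - previous_demand) / abs(previous_demand)
    return change >= threshold


small_shift = should_recalculate(1000, 1020)   # 2% change
large_shift = should_recalculate(1000, 1150)   # 15% change
```

Using the absolute relative change means a 15% drop triggers recalculation just as a 15% rise does; whether a real deployment treats increases and decreases symmetrically is not stated in the article.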

In short, Aera’s approach to supply chain management is based on a data fabric platform. Some of “our clients were saying we should sell this separately. I refuse,” Mr. Laluyaux asserted. “Our vision for our technology took a while to achieve. And that vision wouldn’t have been achieved if the data fabric core was separated from the decision engine.”